Photos & Camera

WWDC24

21:18

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

21:52

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application...

-

22:49

22:49

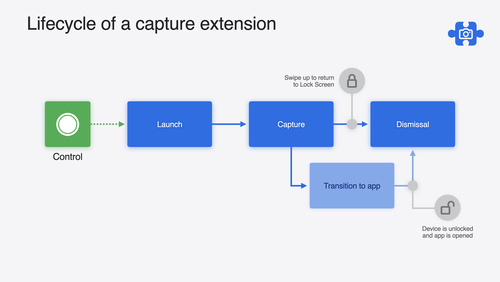

Build a great Lock Screen camera capture experience

Find out how the LockedCameraCapture API can help you bring your capture application's most useful information directly to the Lock Screen. Examine the API's features and functionality, learn how to get started creating a capture extension, and find out how that extension behaves when the device...

23:48

Keep colors consistent across captures

Meet the Constant Color API and find out how it can help people use your app to determine precise colors. You'll learn how to adopt the API, explore its scientific and marketing potential, and discover best practices for making the most of the technology.

34:29

Use HDR for dynamic image experiences in your app

Discover how to read and write HDR images and process HDR content in your app. Explore the new supported HDR image formats and advanced methods for displaying HDR images. Find out how HDR content can coexist with your user interface — and what to watch out for when adding HDR image support to...

16:06

What’s new in DockKit

Discover how intelligent tracking in DockKit allows for smoother transitions between subjects. We will cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.

WWDC23

24:53

Support Cinematic mode videos in your app

Discover how the Cinematic Camera API helps your app work with Cinematic mode videos captured in the Camera app. We'll share the fundamentals — including Decision layers — that make up Cinematic mode video, show you how to access and update Decisions in your app, and help you save and load those...

-

29:12

29:12

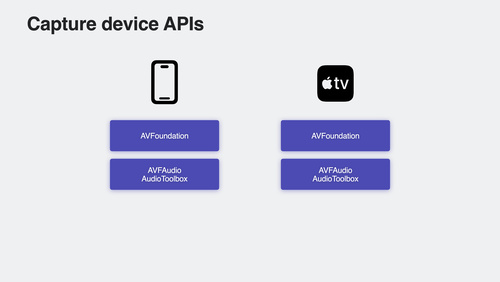

Discover Continuity Camera for tvOS

Discover how you can bring AVFoundation, AVFAudio, and AudioToolbox to your apps on tvOS and create camera and microphone experiences for the living room. Find out how to support tvOS in your existing iOS camera experience with the Device Discovery API, build apps that use iPhone as a webcam or...

28:58

Support HDR images in your app

Learn how to identify, load, display, and create High Dynamic Range (HDR) still images in your app. Explore common HDR concepts and find out about the latest updates to the ISO specification. Learn how to identify and display HDR images with SwiftUI and UIKit, create them from ProRAW and RAW...

-

34:57

34:57

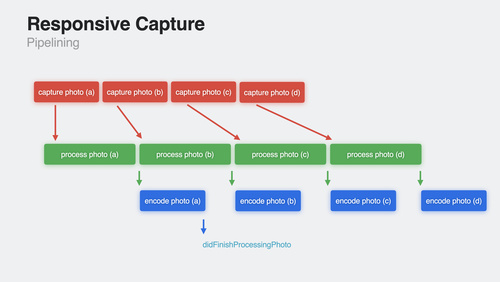

Create a more responsive camera experience

Discover how AVCapture and PhotoKit can help you create more responsive and delightful apps. Learn about the camera capture process and find out how deferred photo processing can help create the best quality photo. We'll show you how zero shutter lag uses time travel to capture the perfect action...

-

13:43

13:43

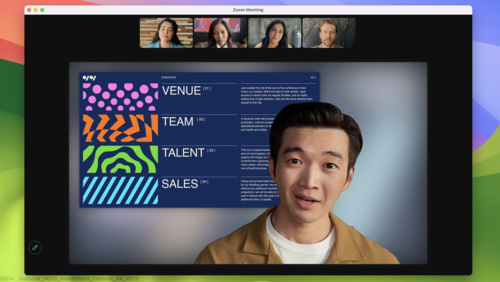

What’s new in ScreenCaptureKit

Level up your screen sharing experience with the latest features in ScreenCaptureKit. Explore the built-in system picker, Presenter Overlay, and screenshot capabilities, and learn how to incorporate these features into your existing ScreenCaptureKit app or game.

32:41

Support external cameras in your iPadOS app

Learn how you can discover and connect to external cameras in your iPadOS app using the AVFoundation capture classes. We'll show you how to rotate video from both external and built-in cameras, support external microphones with USB-C, and perform audio routing. Explore telephony support, tunings...

17:35

Integrate with motorized iPhone stands using DockKit

Discover how you can create incredible photo and video experiences in your camera app when integrating with DockKit-compatible motorized stands. We'll show how your app can automatically track subjects in live video across a 360-degree field of view, take direct control of the stand to customize...

14:16

Embed the Photos Picker in your app

Discover how you can simply, safely, and securely access the Photos Library in your app. Learn how to get started with the embedded picker and explore the options menu and HDR still image support. We'll also show you how to take advantage of UI customization options to help the picker blend into...

-

-

Tech Talks -

14:05

14:05

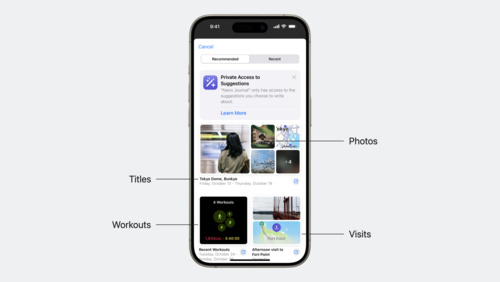

Discover the Journaling Suggestions API

Find out how the new Journaling Suggestions API can help people reflect on the small moments and big events in their lives through your app — all while protecting their privacy. Learn how to leverage the API to retrieve assets and metadata for journaling suggestions, invoke a picker on top of the...

12:50

Discover Reference Mode

Learn how you can match color requirements in demanding pro workflows using Reference Mode on the 12.9-inch iPad Pro with Liquid Retina XDR display. We'll show you how Reference Mode enables you to represent color accurately and provide consistent image representation in workflows like review and...

9:16

QR Code Recognition on iOS 11

iOS 11 provides built-in support to detect and handle QR codes. Discover the supported QR code types, how each type is handled by built-in Camera and Safari apps, and how Universal Links can seamlessly send users to your app when scanning your QR codes.

WWDC22

16:29

Create parametric 3D room scans with RoomPlan

RoomPlan can help your app quickly create simplified parametric 3D scans of a room. Learn how you can use this API to easily add a room scanning experience. We'll show you how to adopt this API, explore the 3D parametric output, and share best practices to help your app get great results with...

19:41

Bring Continuity Camera to your macOS app

Discover how you can use iPhone as an external camera in any Mac app with Continuity Camera. Whether you're building video conferencing software or an experience that makes creative use of cameras, we'll show you how you can enhance your app with automatic camera switching. We'll also explore how...

14:26

Add Live Text interaction to your app

Learn how you can bring Live Text support for still photos or paused video frames to your app. We'll share how you can easily enable text interactions, translation, data detection, and QR code scanning within any image view on iOS, iPadOS, or macOS. We'll also go over how to control interaction...

12:11

Capture machine-readable codes and text with VisionKit

Meet the Data Scanner in VisionKit: This framework combines AVCapture and Vision to enable live capture of machine-readable codes and text through a simple Swift API. We'll show you how to control the types of content your app can capture by specifying barcode symbologies and language selection...

20:36

Explore EDR on iOS

EDR is Apple's High Dynamic Range representation and rendering pipeline. Explore how you can render HDR content using EDR in your app and unleash the dynamic range capabilities of HDR displays on iPhone and iPad. We'll show how you can take advantage of the native EDR APIs on iOS, provide best...

18:22

Discover advancements in iOS camera capture: Depth, focus, and multitasking

Discover how you can take advantage of advanced camera capture features in your app. We'll show you how to use the LiDAR scanner to create photo and video effects and perform accurate depth measurement. Learn how your app can use the camera for picture-in-picture or multitasking, control...

17:41

Display EDR content with Core Image, Metal, and SwiftUI

Discover how you can add support for rendering in Extended Dynamic Range (EDR) from a Core Image based multi-platform SwiftUI application. We'll outline best practices for displaying CIImages to a MTKView using ViewRepresentable. We'll also share the simple steps to enable EDR rendering and...

32:08

Create camera extensions with Core Media IO

Discover how you can use Core Media IO to easily create macOS system extensions for software cameras, hardware cameras, and creative cameras. We'll introduce you to our modern replacement for legacy DAL plug-ins — these extensions are secure, fast, and fully compatible with any app that uses a...

14:30

What's new in the Photos picker

PHPicker provides simple and secure integration between your app and the system Photos library. Learn how SwiftUI and Transferable can help you offer integration across iOS, iPadOS, macOS, and watchOS. We'll also show you how you can use AppKit and NSOpenPanel to bring the Photos picker on Mac...

22:04

Display HDR video in EDR with AVFoundation and Metal

Learn how you can take advantage of AVFoundation and Metal to build an efficient EDR pipeline. Follow along as we demonstrate how you can use AVPlayer to display HDR video as EDR, add playback into an app view, render it with Metal, and use Core Image or custom Metal shaders to add video effects...

10:00

Discover PhotoKit change history

PhotoKit can help you build rich, photo-centric features. Learn how you can easily track changes to image assets with the latest APIs in PhotoKit. We'll introduce you to the PHPhotoLibrary change history API and demonstrate how you can persist change tokens across launches to help your app...

WWDC21

16:49

Build dynamic iOS apps with the Create ML framework

Discover how your app can train Core ML models fully on device with the Create ML framework, enabling adaptive and customized app experiences, all while preserving data privacy. We'll explore the types of models that can be created on-the-fly for image-based tasks like Style Transfer and Image...

11:34

Use the camera for keyboard input in your app

Learn how you can support Live Text and intelligently pull information from the camera to fill out forms and text fields in your app. We'll show you how to apply content filtering to capture the correct information when someone uses the camera as keyboard input and apply it to a relevant...

36:02

What’s new in camera capture

Learn how you can interact with Video Effects in Control Center including Center Stage, Portrait mode, and Mic modes. We'll show you how to detect when these features have been enabled for your app and explore ways to adopt custom interfaces to make them controllable from within your app...

9:03

Explore Core Image kernel improvements

Discover how you can add Core Image kernels written in the Metal Shading Language into your app. We'll explore how you can use Xcode rules and naming conventions for Core Image kernels written in the Metal Shading Language, and help you make sense of Metal's Stitchable functions and dynamic...

19:57

Explore low-latency video encoding with VideoToolbox

Supporting low-latency encoders has become an important aspect of the video application development process. Discover how VideoToolbox supports low-delay H.264 hardware encoding to minimize end-to-end latency and achieve new levels of performance for optimal real-time communication and high-quality...

26:31

Capture and process ProRAW images

When you support ProRAW in your app, you can help photographers easily capture and edit images by combining standard RAW information with Apple's advanced computational photography techniques. We'll take you through an overview of the format, including the look and feel of ProRAW images, quality...

17:58

Improve access to Photos in your app

PHPicker is the simplest and most secure way to integrate the Photos library into your app — and it's getting even better. Learn how to handle ordered selection of images in your app, as well as pre-selecting assets any time the picker is shown. And for apps that need to integrate more deeply...

14:39

Capture high-quality photos using video formats

Your app can take full advantage of the powerful camera systems on iPhone by using the AVCapture APIs. Learn how to choose the most appropriate photo or video formats for your use cases while balancing the trade-offs between photo quality and delivery speed. Discover some powerful new algorithms...

34:16

Explore HDR rendering with EDR

EDR is Apple's High Dynamic Range representation and rendering pipeline. Explore how you can render HDR content using EDR in your app and unleash the dynamic range capabilities of your HDR display including Apple's internal displays and Pro Display XDR. We'll show you how game and pro app...

WWDC20

6:12

Build Metal-based Core Image kernels with Xcode

Learn how to integrate and load Core Image kernels written in the Metal Shading Language into your application, and discover how you can apply these image filters to create unique effects. Explore how to use Xcode rules and naming conventions for Core Image kernels written in Metal Shading...

8:37

Optimize the Core Image pipeline for your video app

Explore how you can harness the processing power of Core Image and optimize video performance within your app. We'll show you how to build your Core Image pipeline for applying effects to your video in your apps: Discover how to reduce your app's memory footprint when using CIContext, and learn...

14:33

Meet the new Photos picker

Let people select photos and videos to use in your app without requiring full Photo Library access. Discover how the PHPicker API for iOS and Mac Catalyst ensures privacy while providing your app the features you need. PHPicker is the modern replacement for UIImagePickerController. In addition...

24:35

Explore Computer Vision APIs

Learn how to bring Computer Vision intelligence to your app when you combine the power of Core Image, Vision, and Core ML. Go beyond machine learning alone and gain a deeper understanding of images and video. Discover new APIs in Core Image and Vision to bring Computer Vision to your application...

14:17

Handle the Limited Photos Library in your app

Access the photos and videos you need for your app while preserving privacy. With the new Limited Photos Library feature, people can directly control which photos and videos an app can access to protect their private content. We'll explore how this feature may affect your app, and take you...

7:16

Discover Core Image debugging techniques

Find and fix rendering and optimization issues in your Core Image pipeline with an Xcode environment variable. Discover how you can set the environment variable for visualizing your Core Image graphs. You'll learn how to generate Core Image graphs and how to interpret them to discover memory, color,...

23:58

Capture and stream apps on the Mac with ReplayKit

Learn how you can integrate ReplayKit into your Mac apps and games to easily share screen recordings or broadcast live audio and visuals online. We'll show you how to capture screen content, audio, and microphone input inside your Mac apps, and even broadcast your video to a live audience. For...

WWDC19

15:33

Introducing Photo Segmentation Mattes

Photos captured in Portrait Mode on iOS 12 contain an embedded person segmentation matte that makes it easy to produce creative visual effects like background replacement. iOS 13 leverages on-device machine learning to provide new segmentation mattes for any captured photo. Learn about the new...

39:50

Understanding Images in Vision Framework

Learn all about the many advances in the Vision Framework, including effortless image classification, image saliency, determining image similarity, improvements in facial feature detection, and face capture quality scoring. This packed session will show you how easy it is to bring powerful...

60:34

Advances in Camera Capture & Photo Segmentation

Powerful new features in the AVCapture API let you capture photos and video from multiple cameras simultaneously. Photos now benefit from semantic segmentation that allows you to isolate hair, skin, and teeth in a photo. Learn how these advances enable you to create great camera apps and easily...

44:40

Introducing Multi-Camera Capture for iOS

In AVCapture on iOS 13 it is now possible to simultaneously capture photos and video from multiple cameras on iPhone XS, iPhone XS Max, iPhone XR, and the latest iPad Pro. It is also possible to configure the multiple microphones on the device to shape the sound that is captured. Learn how to...

WWDC17

29:05

High Efficiency Image File Format

Learn the essential details of the new High Efficiency Image File Format (HEIF) and discover which capabilities are used by Apple platforms. Gain deep insights into the container structure, the types of media and metadata it can handle, and the many other advantages that this new standard affords.

58:39

Capturing Depth in iPhone Photography

Portrait mode on iPhone 7 Plus showcases the power of depth in photography. In iOS 11, the depth data that drives this feature is now available to your apps. Learn how to use depth to open up new possibilities for creative imaging. Gain a broader understanding of high-level depth concepts and...

WWDC16

20:44

AVCapturePhotoOutput - Beyond the Basics

Continue your learning from Session 501: Advances in iOS Photography, with some additional details on scene monitoring and resource management in AVFoundation's powerful new AVCapturePhotoOutput API.

59:42

Advances in iOS Photography

People love to take pictures with iPhone. In fact, it's the most popular camera in the world, and photography apps empower this experience. Explore new AVFoundation Capture APIs which allow for the capture of Live Photos, RAW image data from the camera, and wide color photos.
